What is Accessibility Testing and Why is it Important?

The World Health Organization estimates that about 1.3 billion people live with a significant disability. That’s roughly one in six of us.

Now imagine you launch a website and millions of those users are shut out immediately because it doesn’t work with a screen reader or can’t be used without a mouse.

That’s where accessibility testing comes in.

It’s a branch of software testing that focuses on finding and fixing the barriers that stop people with visual, hearing, motor, or cognitive impairments from using your site or app.

Companies used to treat it as a “nice to have,” but that’s no longer the case. Laws such as Section 508 and the European Accessibility Act, along with standards like WCAG, make accessibility a requirement. You’ll also find online accessibility testing tools and free EAA audits that help you catch accessibility problems early instead of scrambling after launch.

In this blog, we’ll cover the key standards you need to know, the different types of tests, accessibility testing best practices, the tools that make it easier, common challenges you’ll face, and where accessibility trends are heading next.

Let’s begin!

Accessibility Standards and Compliance Laws

Accessibility for websites and apps means designing them so that everyone can use them, whether or not they have a disability. The most common standard for this is WCAG, the Web Content Accessibility Guidelines, which explain what “accessible” looks like in practice.

WCAG is built on four simple ideas, called the POUR principles:

Perceivable: Make sure people can take in your content in more than one way. Add alt text to images, captions to videos, and transcripts to audio.

Operable: All actions should work with a keyboard, not just a mouse or touch screen.

Understandable: Keep language, navigation, and layouts simple enough that users know what to expect.

Robust: Build so your site works with today’s assistive tools, like screen readers, and can handle future ones too.
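To make the Perceivable principle concrete, here is a minimal sketch of the kind of rule-based check an automated scanner performs: finding images with no alt attribute. It uses only Python’s standard library; the function name `find_images_missing_alt` and the sample markup are illustrative assumptions, not part of any real tool.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects <img> tags that have no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src values of images without an alt attribute

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # alt="" is valid for purely decorative images, so only a
        # completely missing attribute is flagged here.
        if "alt" not in attr_map:
            self.missing.append(attr_map.get("src", "(no src)"))


def find_images_missing_alt(html: str) -> list[str]:
    """Return the src of every image that lacks an alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


# Hypothetical sample markup: one described image, one undescribed, one decorative.
sample = (
    '<img src="logo.png" alt="Acme logo">'
    '<img src="chart.png">'
    '<img src="divider.png" alt="">'
)
print(find_images_missing_alt(sample))  # ['chart.png']
```

A check like this only confirms that alt text exists. Whether the text is meaningful still needs a human reviewer.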

WCAG has three levels:

A: The basics to remove the most obvious barriers.

AA: The level most laws and organisations aim for.

AAA: The strictest level, not always realistic for every page or feature.

WCAG isn’t the only rulebook.

In the US, the ADA has been interpreted to cover the websites and apps of businesses serving the public. Section 508 requires federal agencies, and in practice the contractors that supply them, to meet accessibility standards.

In Europe, EN 301 549 is the benchmark. The European Accessibility Act (EAA) has made accessibility mandatory for many online services since June 28, 2025.

The point isn’t just avoiding fines or lawsuits. When you meet these standards, you send a clear signal to users with disabilities that their needs matter. That’s why many teams have started bringing in a website accessibility testing company to build accessibility checks right into their web development process from the start.

What Types of Disabilities Should Be Considered in Accessibility Testing?

If you are planning accessibility testing, first understand the five broad categories that disabilities are usually grouped into. Each one shapes what you test for and the tools you use.

| Disability Type | What It Includes | Key Testing Focus |
|---|---|---|
| Visual impairments | Partial or full blindness, low vision, colour blindness | Check contrast ratios, text scaling, test screen reader accessibility, ensure navigation works without sight |
| Auditory impairments | Partial or full hearing loss | Provide captions for videos, transcripts for audio, visual alerts for sounds |
| Motor impairments | Conditions limiting fine motor control or mobility | Ensure full keyboard access, clear focus indicators, large enough clickable/touch targets |
| Cognitive impairments | Memory, attention, problem-solving, and reading challenges | Use plain language, consistent layouts, and avoid overly complex workflows |
| Speech impairments | Situations where voice input isn’t possible or reliable | Offer alternative input methods for all voice-only features |

When you map every test case to its related category, you ensure that you don’t just check boxes but actually cover the real-world barriers people face when they use web apps.
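One lightweight way to do that mapping is to tag each test case with its category and then check which categories have no coverage yet. The sketch below assumes a simple in-memory list of test cases; the IDs, titles, and the `uncovered_categories` helper are hypothetical and only illustrate the idea.

```python
# The five categories from the table above.
CATEGORIES = {"visual", "auditory", "motor", "cognitive", "speech"}

# Hypothetical test cases, each tagged with the category it covers.
test_cases = [
    {"id": "TC-01", "title": "Body text meets contrast ratio", "category": "visual"},
    {"id": "TC-02", "title": "Product video has captions", "category": "auditory"},
    {"id": "TC-03", "title": "Checkout works with keyboard only", "category": "motor"},
    {"id": "TC-04", "title": "Error messages use plain language", "category": "cognitive"},
]


def uncovered_categories(cases: list[dict]) -> list[str]:
    """Return the disability categories that no test case is mapped to."""
    covered = {case["category"] for case in cases}
    return sorted(CATEGORIES - covered)


print(uncovered_categories(test_cases))  # ['speech']
```

Here the gap is the speech category, which would prompt a test for alternatives to any voice-only feature.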

How Can You Run Accessibility Testing, and Which Method Works Best?

You can approach web accessibility testing in three main ways, and the right choice depends on your goals and timeline.

Manual testing

You test the site the way a user would. Navigate with only a keyboard. Zoom the page and watch how the layout changes. Turn on a screen reader and try completing a task. Manual work catches things scanners miss, like vague link text or confusing error messages.

If you’re learning how to test the accessibility of a website, this is the best place to start.

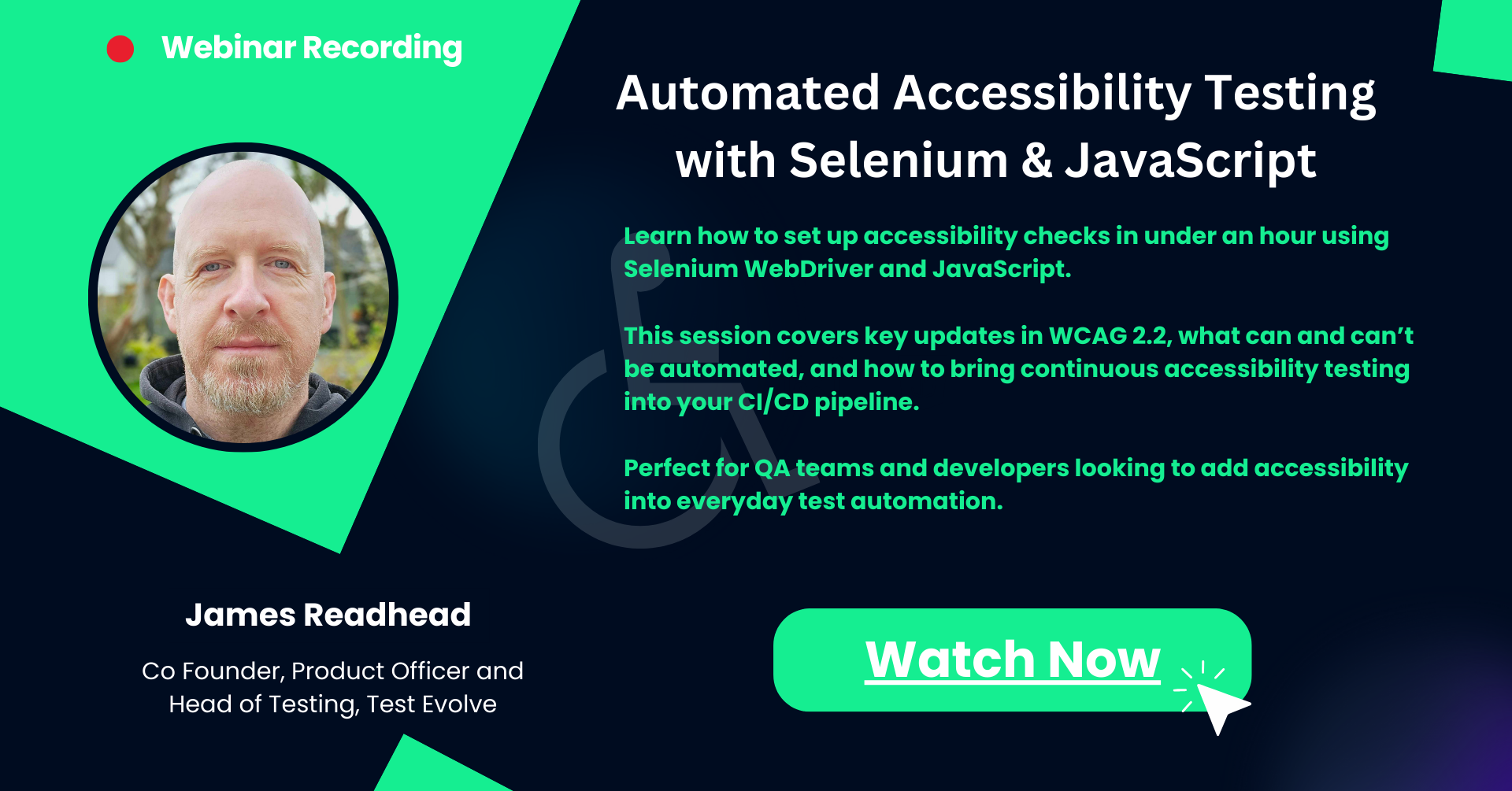

Automated testing

You run tools that scan pages for known issues: missing alt text, poor colour contrast, and bad heading order. With test automation, these checks run on every build and alert you to regressions before they ship. Automation is quick and repeatable, but it won’t catch everything.
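Colour contrast is a good example of a check that automates well, because WCAG defines it as a formula: the ratio of the relative luminance of the lighter colour to that of the darker one, each offset by 0.05. The sketch below follows the WCAG 2.x definition and the Level AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are illustrative, and it assumes six-digit hex colours.

```python
def _linearise(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance of a six-digit hex colour such as '#1a2b3c'."""
    h = hex_colour.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearise(r) + 0.7152 * _linearise(g) + 0.0722 * _linearise(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colours, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG Level AA contrast threshold for text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


print(round(contrast_ratio("#000000", "#ffffff"), 2))  # 21.0
print(passes_aa("#767676", "#ffffff"))  # True
print(passes_aa("#777777", "#ffffff"))  # False
```

The last two lines show how narrow the margin can be: two greys that look identical to most people fall on opposite sides of the AA threshold.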

Hybrid approach

You mix the two. Let automation run quick scans on every page to catch obvious issues before they pile up. Then step in and test the areas that need human judgement, such as link clarity, form error handling, or how easy it is to complete a task with a screen reader.

This way, you cover more ground in less time and still catch the problems that tools miss. Teams often bring in an accessibility testing agency when they need extra expertise or want to embed this workflow into their release cycle.

What Assistive Technologies and Tools Should You Know for Accessibility Testing?

Testing with real assistive tech shows you how users experience your site, not just what your code claims to do. Each tool in your kit uncovers different issues, so you need to know what they do well and where they fall short.

Screen readers (NVDA, JAWS, VoiceOver)

NVDA is free and widely used on Windows, making it ideal for teams starting on a budget. JAWS is still common in enterprise and education environments, especially where licensing is already in place. VoiceOver comes built into macOS and iOS, so it’s the default for many Apple users. Testing with at least two of these helps catch differences in how they handle forms, ARIA roles, and dynamic content. Automated website accessibility checkers commonly miss these differences.

Screen magnifiers

Windows Magnifier and ZoomText are the most referenced in usage surveys. They reveal layout problems that don’t show up at 100% zoom, like overlapping elements or clipped buttons. This is where your CSS and responsive design choices get tested in real conditions.

Speech recognition software

Dragon NaturallySpeaking and built-in OS dictation let users operate an interface entirely by voice. They depend on accurate, visible labels and consistent navigation landmarks. Missing these forces users into workarounds.
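Voice users typically say the text they can see, so a control whose accessible name does not contain its visible label is hard to activate by speech. The sketch below is a simplified static check for that mismatch on buttons and links that carry an `aria-label`. It is an assumption-laden illustration, not how any particular scanner works: it ignores nested controls, images, and `aria-labelledby`.

```python
from html.parser import HTMLParser


class LabelInNameChecker(HTMLParser):
    """Flags buttons/links whose aria-label does not contain their visible text."""

    TRACKED = {"button", "a"}

    def __init__(self):
        super().__init__()
        self._current = None  # (tag, aria_label, text_chunks) for the open control
        self.mismatches = []  # (visible_text, aria_label) pairs

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED and self._current is None:
            self._current = (tag, dict(attrs).get("aria-label"), [])

    def handle_data(self, data):
        if self._current is not None:
            self._current[2].append(data)

    def handle_endtag(self, tag):
        if self._current is None or tag != self._current[0]:
            return
        _, aria_label, chunks = self._current
        self._current = None
        # Normalise whitespace and case before comparing.
        visible = " ".join("".join(chunks).split()).lower()
        if aria_label and visible and visible not in aria_label.lower():
            self.mismatches.append((visible, aria_label))


def find_label_mismatches(html: str) -> list[tuple[str, str]]:
    checker = LabelInNameChecker()
    checker.feed(html)
    return checker.mismatches


# Hypothetical markup: the first button is announced as "Submit order"
# but shows "Buy now", so saying "click Buy now" may not work.
sample_controls = (
    '<button aria-label="Submit order">Buy now</button>'
    '<button aria-label="Search products">Search</button>'
    '<a href="/help">Help</a>'
)
print(find_label_mismatches(sample_controls))  # [('buy now', 'Submit order')]
```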

Automated checkers (axe, WAVE, Lighthouse, Pa11y)

Axe is favoured in CI pipelines for its API and rule accuracy. WAVE is strong for quick visual reviews, showing exactly where problems appear on a page. Lighthouse’s accessibility score is useful for trend tracking but misses complex interaction issues, which is why it helps to know what an accessibility checker does not check. Pa11y runs headless, making it easy to integrate into automation scripts and nightly builds.

Browser developer tools for accessibility

Chrome, Firefox, and Edge ship with built-in audit panels, contrast checkers, and ARIA inspectors. They’re best for quick, targeted checks when debugging, not for full compliance testing.

Pair automated scans with manual sessions using real assistive tech, and you’ll find issues no single method can expose. That’s where a strong software testing tutorial or hands-on training with an experienced tester pays off.

Get Started With This Step-By-Step Accessibility Testing Process

An effective accessibility testing process builds on what you’ve already learnt about WCAG standards, disability categories, testing types, and assistive technologies. Here’s how to put all of it into action.

Step 1. Planning

Define the scope: Decide whether you’re auditing a single flow, a group of templates, or the entire site.

Set the target: Confirm whether you’ll check website accessibility against WCAG Level AA (the level most regulations reference) or push for AAA in high-value areas like checkout or booking.

Prioritise by impact: Use your analytics and user feedback to identify high-traffic pages or features that would create the biggest barriers if they failed accessibility.

Include accessibility in design handoff: Bring designers into this step so they can review colour contrast, focus order, and heading structure before development begins.
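Heading structure is one of the handoff checks that can be verified mechanically once a template exists. As a rough sketch, the code below collects heading levels in document order and reports any jump of more than one level (for example, an h2 followed directly by an h4). The helper name and sample markup are assumptions made for illustration.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of each h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html: str) -> list[tuple[int, int]]:
    """Return (previous_level, level) pairs where the outline skips a level.

    A previous level of 0 means the page did not start with an h1.
    """
    collector = HeadingCollector()
    collector.feed(html)
    skips = []
    previous = 0
    for level in collector.levels:
        if level > previous + 1:
            skips.append((previous, level))
        previous = level
    return skips


# Hypothetical page outline with one skipped level (h2 -> h4).
sample_page = (
    "<h1>Booking</h1><h2>Dates</h2><h4>Check-in</h4>"
    "<h2>Guests</h2><h3>Adults</h3>"
)
print(skipped_heading_levels(sample_page))  # [(2, 4)]
```

Moving back up the outline (h4 to h2) is fine, so only downward jumps are reported.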

Step 2. Selecting Tools and Assistive Tech

You’ve already seen in the Assistive Technologies section which tools cover which needs.

Pick a balanced mix: at least one screen reader, one magnifier, and one automated scanner.

Configure automated accessibility testing in your CI/CD environment to run with every code commit.

Match tools to platform: VoiceOver for Mac/iOS-heavy user bases, TalkBack for Android, and NVDA/JAWS for Windows.

Step 3. Executing Tests

Manual pass: Follow the flows from your “Types of Disabilities” table and simulate each need — e.g., navigate entirely by keyboard, zoom to 200%, complete a form with a screen reader.

Automation run: Trigger your CI/CD scans to cover the entire codebase. These catch quick, rule-based issues before they pile up.

Human + machine: Always run automation before manual testing to clear easy issues so testers spend time on context and usability.

Step 4. Reporting Findings

Map each issue to the specific WCAG criterion it fails.

Rate severity by legal risk + user impact.

Add enough context for developers to reproduce and fix the issue without guesswork, such as screenshots, code snippets, and expected behaviour.
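The reporting rules above can be captured in a small data structure so every finding carries the same fields. This is a sketch under assumptions: the 1 to 3 scales for legal risk and user impact, the field names, and the sample findings are all hypothetical, and severity is simply the sum of the two scores, as the "legal risk + user impact" rule suggests.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    summary: str
    wcag_criterion: str      # the success criterion the issue fails
    legal_risk: int          # assumed scale: 1 (low) to 3 (high)
    user_impact: int         # assumed scale: 1 (low) to 3 (high)
    steps_to_reproduce: str
    expected_behaviour: str

    @property
    def severity(self) -> int:
        """Combined score: legal risk plus user impact."""
        return self.legal_risk + self.user_impact


def sort_by_severity(findings: list[Finding]) -> list[Finding]:
    """Most severe findings first, so they reach developers first."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


# Hypothetical findings from an audit.
findings = [
    Finding(
        summary="Footer link text is low contrast",
        wcag_criterion="1.4.3 Contrast (Minimum)",
        legal_risk=2,
        user_impact=1,
        steps_to_reproduce="Open the home page and inspect the footer links.",
        expected_behaviour="Link text has at least 4.5:1 contrast.",
    ),
    Finding(
        summary="Date picker traps keyboard focus",
        wcag_criterion="2.1.2 No Keyboard Trap",
        legal_risk=3,
        user_impact=3,
        steps_to_reproduce="Tab into the date picker on the booking form.",
        expected_behaviour="Tab or Escape moves focus out of the picker.",
    ),
]

for finding in sort_by_severity(findings):
    print(finding.severity, finding.wcag_criterion, "-", finding.summary)
```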

Step 5. Remediation and Retesting

Verify fixes using the same method that detected the issue in the first place.

Add resolved issues to your regression suite so they get checked automatically in future builds.

Make accessibility part of the test automation training you give QA and dev teams so knowledge doesn’t live in a single role or person.

What’s the Best Way to Integrate Accessibility Testing into Agile and DevOps?

| Workflow Area | What to Do | Tools / Methods | Why It Works |
|---|---|---|---|
| Build Integration | Add an accessibility scan job to the CI/CD pipeline to test accessibility on every pull request or merge. Fail the build for high-severity issues. | axe-core CLI, Pa11y CI, Lighthouse CI, GitHub Actions, GitLab CI, Jenkins | Stops inaccessible code from reaching production. |
| Shift-Left in Sprints | Include accessibility acceptance criteria in every story. Designers check colour contrast, devs run local scans before committing code. | Contrast checkers, keyboard navigation tests, ARIA inspection | Catches issues before QA sees the build, reducing rework. |
| Targeted Component Audits | Use accessibility testing services during development for complex UI components (modals, date pickers, carousels). Reuse the compliant version across projects. | Manual assistive tech testing, component library validation | Solves recurring issues early and at scale. |
| Results Tracking | Feed manual + automated results into integration pipeline dashboards alongside other quality metrics. | CI/CD reports, Jira/issue tracker integration, quality dashboards | Makes accessibility progress visible and measurable across sprints. |
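The "fail the build for high-severity issues" rule from the Build Integration row can be sketched as a small gate script. It assumes the scan results have been saved as JSON with a `violations` list whose entries carry `id` and `impact` fields, which is the shape axe-core uses for its violations; how the file is produced and the choice of which impacts block a build are assumptions you would adapt to your own pipeline.

```python
import json

# Assumed policy: only these axe impact levels fail the build.
BLOCKING_IMPACTS = {"serious", "critical"}


def blocking_violations(results: dict) -> list[dict]:
    """Return the violations whose impact should block the build."""
    return [
        violation
        for violation in results.get("violations", [])
        if violation.get("impact") in BLOCKING_IMPACTS
    ]


def exit_code(results: dict) -> int:
    """0 lets the build pass; 1 fails it."""
    return 1 if blocking_violations(results) else 0


# Hypothetical scan output, trimmed to the fields this gate reads.
sample_report = json.loads("""
{
  "violations": [
    {"id": "color-contrast", "impact": "serious"},
    {"id": "region", "impact": "moderate"},
    {"id": "image-alt", "impact": "critical"}
  ]
}
""")

for violation in blocking_violations(sample_report):
    print(f"BLOCKING: {violation['id']} ({violation['impact']})")
print("exit code:", exit_code(sample_report))  # exit code: 1
```

In a pipeline job, the script would load the real report file and pass the result of `exit_code` to `sys.exit`, so the CI system marks the step as failed.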


How Can Teams Overcome Accessibility Testing Challenges With Test Evolve?

Accessibility testing sounds simple on paper: run a scan, fix issues, and move on. But in practice, teams hit roadblocks that stall progress. Here are the biggest ones we see and how high-performing QA teams deal with them:

Noise from Automated Scanners

Automated tools like axe or WAVE are great, but they can return 200+ issues in one sweep. Some of those are false positives (e.g., ARIA landmarks flagged even when they meet WCAG). Teams waste hours triaging, which can slow down a release.

Fix: Run automation inside your integration pipelines, but layer it with manual prioritisation. Test Evolve helps here by embedding axe checks directly into regression runs, giving you repeatable results linked to your CI/CD.
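One common way to cut scanner noise, sketched below, is to keep a reviewed baseline of issues that a human has already triaged (confirmed false positives or accepted, ticketed items) and surface only what is new. This is a generic illustration rather than a description of how Test Evolve or axe works; the `rule` and `target` field names and the fingerprint format are assumptions.

```python
def fingerprint(issue: dict) -> str:
    """Stable key for an issue: the rule that fired plus the element it fired on."""
    return f"{issue['rule']}::{issue['target']}"


def new_issues(current: list[dict], baseline: set[str]) -> list[dict]:
    """Return only the issues that are not already in the reviewed baseline."""
    return [issue for issue in current if fingerprint(issue) not in baseline]


# Hypothetical baseline: issues a tester has already reviewed.
reviewed_baseline = {
    "landmark-unique::nav.primary",   # triaged as a false positive
    "color-contrast::footer a.legal", # accepted, tracked in the backlog
}

# Hypothetical output of the latest scan.
latest_scan = [
    {"rule": "landmark-unique", "target": "nav.primary"},
    {"rule": "color-contrast", "target": "footer a.legal"},
    {"rule": "label", "target": "form#signup input[name=email]"},
]

for issue in new_issues(latest_scan, reviewed_baseline):
    print("NEW:", fingerprint(issue))
```

Only the unlabeled email field is reported, so the triage session starts with one item instead of three. The baseline itself should be re-reviewed periodically so accepted issues do not become permanent.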

Lack of Specialist Knowledge

Many QA testers understand Selenium or Cypress but not the nuances of accessibility principles like 'perceivable' or 'operable'. This leads to missed problems, e.g., keyboard traps slip by because no one’s trained to test without a mouse.

Fix: Pair manual testing with guided playbooks. Some companies run “accessibility bug bashes” with both testers and developers. Test Evolve can combine automated reports with screenshots and visual regression outputs, making those sessions easier.

Conflicting Priorities in Agile Sprints

Product managers often push for speed, so accessibility checks are delayed until the last stage of release. At that stage, fixing issues can cost several times more, with 5–10x a commonly cited range.

Fix: Bake accessibility into your “Definition of Done”. Instead of running a website accessibility test once per quarter, make it part of daily builds. Test Evolve allows accessibility checks to run with performance and functional tests in the same suite.

Device and Assistive Tech Fragmentation

Passing WCAG on Chrome doesn’t mean your app works in VoiceOver on iOS or NVDA on Windows. Teams struggle to test this breadth.

Fix: Use a combination of browser/device farms (e.g., BrowserStack, Sauce Labs) and exploratory sessions with assistive tools. Test Evolve integrates with these platforms so you can validate accessibility across many environments without separate workflows.

Stakeholder Buy-In

Business leaders sometimes see accessibility as a compliance tick-box, not a necessity. That means testers don’t get the time or budget to go deep.

Fix: Treat accessibility bugs like core software issues with real ROI impact, that is, avoiding lawsuits, reaching wider audiences, and even improving SEO. With Test Evolve, reporting dashboards make it simple to show how accessibility ties directly to product value. Plus, you can try it free for 30 days, making it a cost-effective way to get started.

Closing the Gap Between Challenge and Practice

Accessibility testing is about changing how teams think about quality. False positives, knowledge gaps, and sprint pressure will always exist, but when accessibility is treated as part of core QA, not an afterthought, those hurdles will shrink.

Embed accessibility checks into integration pipelines and combine automation with human review, and your team will build products that are usable by everyone, not just compliant on paper.


What’s Next for Accessibility Testing?

Accessibility is no longer optional. Legal frameworks and market pressures are pushing for fundamental shifts in how teams build and test digital products.

Major Change 1: Enforcement under the European Accessibility Act (EAA)

The EAA became enforceable on June 28, 2025, and its reach extends beyond new products and services. From that date forward, newly released offerings must be accessible immediately, while legacy products and services have until 2030 to comply.

This staged approach means:

Teams can't treat accessibility as a one-off checkbox.

Procurement and vendor contracts increasingly demand proof of ongoing compliance.

Audits will require continuous, documented results, not just a snapshot during final testing.

Major Change 2: Legal pressure in the U.S. escalates

Accessibility litigation remains high, with 8,800 ADA Title III complaints filed in federal courts in 2024, a 7% increase from 2023.

Activity has stayed high into 2025: 457 lawsuits were filed in March alone, concentrated in New York, Florida, and California.

This steady volume underlines that even partial compliance can leave teams exposed and that continuous accessibility testing is increasingly a business risk, not a technical nice-to-have.

Major Change 3: Smarter AI tools and their limits

AI-powered accessibility scanning is growing more sophisticated, catching nuanced issues like meaningless alt text or contrast glitches.

But without human validation, AI can’t assess usability or context. The most forward-looking teams use AI to flag problems quickly, then tackle them with expert oversight and manual testing, a hybrid that balances scale and accuracy.

Major Change 4: Overlays under scrutiny

Accessibility overlays promise quick fixes, but growing scrutiny of their effectiveness reveals real risks.

Many rely on superficial pixel shifts rather than fixing underlying code, and they can break screen reader workflows and even mislead compliance auditors. Market consensus is shifting: true accessibility must be built in, not patched on top.

Major Change 5: Inclusive design = smarter, not just safer

The most successful teams are moving from compliance-first to inclusive-by-design. This culture shift enables:

Lower rework costs: accessibility is baked into design tokens and components.

Stronger market trust: accessible products reinforce brand loyalty and reach underserved users.

Future resilience: accessible foundations support emerging interfaces like voice and AI navigation down the road.

As these shifts happen, organisations that adapt early will find accessibility testing less of a hurdle and more of a competitive edge. Request a consultation to find out how your team can also gain this advantage.

Make Accessibility Testing a Standard, Not an Afterthought

With over 1.3 billion people worldwide living with disabilities (WHO), building software that excludes them is a missed opportunity and a business and compliance risk. Beyond regulations like Section 508 and the European Accessibility Act, teams are realising the deeper accessibility testing benefits: better usability for everyone, stronger SEO, and protection against costly lawsuits.

The key takeaway? Accessibility must be woven into your QA strategy. Modern accessibility testing tools make it possible to integrate checks directly into your pipelines, combining automated accessibility testing with thoughtful manual testing where it matters most.

At Test Evolve, we help organisations turn accessibility into a competitive edge. Our platform integrates with leading accessibility engines, supports automation across web, mobile, and API, and gives you dashboards that make ROI measurable. You can even start risk-free:

Try Test Evolve free for 30 days and see how quickly accessibility fits into your current test suite.

Book a free European Accessibility Act audit to understand exactly where your product stands now that enforcement is under way.

Accessibility testing is about creating digital experiences everyone can use and trust. And with the right partner, like Test Evolve, it’s easier, faster, and more affordable than ever to become both compliant and accessible.

FAQs on Accessibility Testing

Why is accessibility testing important?

With accessibility testing, organisations gain confidence that their website or app meets EAA and Section 508 requirements. Beyond compliance, it also ensures that their websites provide a usable digital experience to everyone, whatever their abilities.

What are the common accessibility testing methods and tools?

Common methods: Automated scanners, screen readers, colour contrast checkers, manual reviews.

Popular tools: WAVE, Lighthouse, Axe

How does accessibility testing improve the user experience?

Accessibility testing improves usability for everyone. Clear navigation, readable text, and multiple input options make apps and websites easier and more enjoyable to use.

What are the principles of WCAG?

The principles of WCAG are Perceivable, Operable, Understandable, and Robust (POUR). They are the core rules for building and testing accessible products.

What is the difference between automated and manual accessibility testing?

Automation quickly finds common issues like missing ARIA labels. Manual testing checks how real users interact with assistive tech. Using both gives the most accurate results.