Top 6 Cross-Browser Testing Challenges

(and How Modern Teams Solve Them in 2025)

A feature of your website may work fine in Chrome but break in Safari. The layout might look clean on a desktop but misaligned on a tablet. A dropdown may work in one browser version but fail in another.

These inconsistencies are common. Teams run into them every day when they try to deliver a stable experience across different browsers.

Teams can catch and resolve such issues with a cross-browser testing (CBT) strategy: the process of ensuring that a web app behaves as intended across different browsers, devices, and operating systems. Its scope ranges from simple UI elements to critical user journeys.

User expectations are higher than ever. A single visual bug or broken interaction can erode trust, increase churn, and reduce conversions. So, overlooking a browser version or device type can be costly.

Let’s break down six key cross-browser testing challenges that can delay a successful launch and explore how high-performing teams are solving them with scalable, real-world strategies.

Key Takeaways

Prioritise browsers and devices based on real user data and not guesswork.

Font rendering, DPI scaling, and CSS fallbacks still cause layout bugs on modern browsers.

React hydration, async scripts, and third-party overlays remain high-risk zones for JavaScript failures.

Automation breaks when DOM states change mid-render. Use stable selectors and condition-based waits.

Real devices catch bugs that emulators and simulators miss, especially on mobile.

Cloud tools speed up testing, but results depend on how you configure and maintain the environments.

Cross-browser testing reduces late-stage issues and enables QA teams to support faster release cycles with confidence.

Why Does Cross-Browser Testing Still Matter?

Modern web experiences aren't limited to one browser, screen size, or device type. Diversity in rendering engines, accessibility settings, and OS-level behaviours continues to grow, and so do the cross-browser testing challenges that come with it.

Without proper coverage, users will run into broken layouts, sluggish performance, or features that simply don’t work. Users no longer tolerate these issues. They abandon their shopping carts and lose trust in your product.

Effective cross-browser testing software will catch these problems before your users do. It flags browser-specific rendering bugs, surfaces UI glitches, and ensures accessibility for users relying on assistive tech.

The result? A smoother experience that keeps users engaged, improves conversion rates, and supports better search visibility. It also reduces the volume of support tickets caused by preventable compatibility issues.

Thus, cross-browser testing protects user trust and gives teams the confidence to ship across environments.

The Top 6 Cross-Browser Testing Challenges

1. Browser & Device Fragmentation

Testing isn’t limited to a few major browsers anymore. It spans a growing matrix of devices, versions, and environments that change rapidly. For example:

Version overload: Multiple active versions across Chrome, Firefox, Safari, and Edge create dozens of test targets per platform.

Mobile variance: Emulators often miss touch input, screen density, and GPU-related bugs found on real Android and iOS devices.

Legacy requirements: Some internal enterprise apps still rely on unsupported browsers like Internet Explorer or legacy Edge.

Regional browser habits: Samsung Internet and UC Browser remain widely used in several markets yet are often skipped in test plans.

Most teams struggle to manage this level of variety unless they actively prioritise which browsers and devices to test and know which ones they can safely ignore.
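To make the size of this matrix concrete, here is a minimal Python sketch that enumerates browser, version, and operating system combinations. The browser names come from the list above. The version labels, the operating systems, and the availability rule are illustrative assumptions, not a recommended test plan.

```python
from itertools import product

# Illustrative placeholders: swap in the versions and platforms you support.
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
VERSIONS = ["latest", "latest-1", "latest-2"]
OPERATING_SYSTEMS = ["Windows", "macOS", "Android", "iOS"]

# Safari ships only on Apple platforms, so these pairs cannot exist.
UNAVAILABLE = {("Safari", "Windows"), ("Safari", "Android")}


def build_matrix(browsers, versions, operating_systems, unavailable):
    """Return every (browser, version, os) target that can actually exist."""
    return [
        (browser, version, os_name)
        for browser, version, os_name in product(browsers, versions, operating_systems)
        if (browser, os_name) not in unavailable
    ]


matrix = build_matrix(BROWSERS, VERSIONS, OPERATING_SYSTEMS, UNAVAILABLE)
print(len(matrix), "test targets before any devices or screen sizes are added")
```

Even with only three versions per browser, the list reaches dozens of targets, which is why an explicit prioritisation step matters.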

2. Visual Layout Instability

Designs that look polished in one browser can appear broken in another even when the underlying code is the same.

Fonts and spacing shift due to anti-aliasing, baseline calculations, or OS-level rendering differences.

Media queries and breakpoints behave inconsistently, especially with flexbox or CSS grid layouts.

Missing resets or vendor-prefixed styles cause layout drift in browsers that interpret base styles differently.

Zoom levels, DPI, and accessibility settings often trigger unexpected wrapping or overflow.

These visual issues usually don’t trigger test failures, but they disrupt UX. While online browser testing tools can catch major misalignments, subtle regressions often slip through unless validated on real devices with resolution-aware visual checks.
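One way to picture a visual check is a pixel comparison with a tolerance, so that minor anti-aliasing noise is ignored while a real layout shift is flagged. The sketch below is a simplified illustration that uses small grayscale grids in place of real screenshots. The function names and both thresholds are assumptions chosen for the example, and dedicated visual testing tools use more sophisticated comparisons.

```python
def diff_ratio(baseline, candidate, pixel_tolerance=8):
    """Fraction of pixels whose grayscale values differ by more than the tolerance."""
    same_shape = len(baseline) == len(candidate) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, candidate)
    )
    if not same_shape:
        # Compare only against a baseline captured at the same resolution.
        raise ValueError("screenshots must share the same dimensions")
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > pixel_tolerance
    )
    return changed / total if total else 0.0


def has_visual_regression(baseline, candidate, max_ratio=0.01):
    """Flag a regression when more than max_ratio of the pixels changed."""
    return diff_ratio(baseline, candidate) > max_ratio


# 4x4 stand-ins for screenshots: a dark square on a white background.
baseline = [[255, 255, 255, 255], [255, 0, 0, 255], [255, 0, 0, 255], [255, 255, 255, 255]]
# Same layout with slight rendering noise, as anti-aliasing might produce.
antialiased = [[255, 255, 255, 255], [252, 3, 0, 255], [255, 0, 4, 255], [255, 255, 255, 255]]
# The square has shifted one column to the right.
shifted = [[255, 255, 255, 255], [255, 255, 0, 0], [255, 255, 0, 0], [255, 255, 255, 255]]

print(has_visual_regression(baseline, antialiased))
print(has_visual_regression(baseline, shifted))
```

Keeping one baseline per browser and resolution avoids comparing screenshots that were never expected to match pixel for pixel.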

3. JavaScript and Framework Implementation Discrepancies

Cross-browser testing becomes even more important when applications rely heavily on JavaScript and frontend frameworks. Each browser uses a different JavaScript engine, such as V8 (Chrome), SpiderMonkey (Firefox), and JavaScriptCore (Safari), and their behaviour diverges in subtle but significant ways.

For instance, engines have adopted newer ECMAScript features on different schedules, and engine-specific optimisations such as those in V8 can lead to variation in how code executes, particularly under asynchronous conditions.

Frameworks like React, Angular, and Vue introduce another layer of complexity. Hydration timing, lifecycle hook execution, and DOM updates may behave differently across environments, especially during server-to-client transitions.

This leads to issues like UI flickers, event binding failures, or partially rendered views. Teams often rely on transpilation (e.g. Babel) and polyfills to patch compatibility gaps, but those too can misfire.

Add third-party scripts like analytics or AB testing overlays, and you increase the chances of browser-specific bugs that silently undermine the user experience.

4. Flaky or Inconsistent Automated Tests

Flaky tests fail unpredictably. They might pass in one run and fail in the next without any code changes. This typically occurs due to asynchronous content, animations, dynamic DOM updates, or delays that aren’t handled in the test logic.
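That definition can be turned into a simple check: rerun the suite several times against the same commit and look for tests with mixed outcomes. The sketch below assumes run results are available as (test name, passed) pairs. The test names and results are made up for illustration.

```python
from collections import defaultdict


def classify_tests(runs):
    """Label each test from repeated runs against the same, unchanged code."""
    outcomes = defaultdict(set)
    for test_name, passed in runs:
        outcomes[test_name].add(passed)

    labels = {}
    for test_name, seen in outcomes.items():
        if seen == {True}:
            labels[test_name] = "stable-pass"
        elif seen == {False}:
            labels[test_name] = "stable-fail"
        else:
            # Both a pass and a failure were seen with no code change.
            labels[test_name] = "flaky"
    return labels


# Hypothetical results from three reruns of the same build.
runs = [
    ("login", True), ("login", True), ("login", True),
    ("checkout_dropdown", True), ("checkout_dropdown", False), ("checkout_dropdown", True),
    ("profile_modal", False), ("profile_modal", False), ("profile_modal", False),
]

for name, label in classify_tests(runs).items():
    print(name, "->", label)
```

A consistently failing test points to a real defect, while a flaky label points to timing or environment handling in the test itself.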

Many of these failures happen because the script looks for an element too early or holds a reference to a DOM node that is replaced after render. Modals, dropdowns, and lazy-loaded components increase the chance of these false failures.

Tools like Selenium WebDriver don’t always wait intelligently. Without explicit waits, retries, and stable selectors, even well-written tests can break, especially when browsers differ subtly in timing or rendering.
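The idea behind an explicit, condition-based wait can be shown without any browser at all. The sketch below is a generic polling helper written for illustration. `wait_until` and `FakeDropdown` are hypothetical names, and in a real suite you would normally use the waiting mechanism your automation tool provides rather than writing your own.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


class FakeDropdown:
    """Stand-in for a lazily rendered element that becomes visible on the third check."""

    def __init__(self, ready_after=3):
        self.checks = 0
        self.ready_after = ready_after

    def is_visible(self):
        self.checks += 1
        return self.checks >= self.ready_after


dropdown = FakeDropdown()
wait_until(dropdown.is_visible, timeout=2.0, interval=0.01)
print("visible after", dropdown.checks, "checks")
```

Unlike a fixed sleep, the helper returns as soon as the condition holds and fails with a clear error when it never does, so the test is both faster on quick browsers and more tolerant of slow ones.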

Flakiness adds rework. It slows releases, wastes debugging time, and chips away at trust in the test suite.

5. Maintaining Infrastructure or Access

Getting access to the right browsers and devices isn’t as easy as it sounds. Whether you’re testing locally or using a cloud service, there’s always something that gets in the way.

Establishing an in-house test lab presents significant challenges.

Buying devices, keeping browsers up to date, and maintaining them takes time. And as soon as a new OS version comes out, you’re behind again.

Cloud platforms offer solutions but require their own configuration overhead.

Cloud platforms give you access to lots of browsers and devices, but you still need to configure test environments, deal with account limits, and sometimes work around network restrictions.

Local vs CI vs cloud: they don’t always match.

A test might pass on your machine, fail in the pipeline, and behave differently in the cloud. Keeping all environments consistent is harder than it should be.
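A first step towards consistency is to record the settings of each environment and compare them. The sketch below assumes each environment can be described as a small dictionary of settings. The setting names and values are invented for the example.

```python
def find_drift(environments):
    """Return the settings whose values differ between environments."""
    keys = set().union(*(env.keys() for env in environments.values()))
    drift = {}
    for key in sorted(keys):
        values = {name: env.get(key) for name, env in environments.items()}
        if len(set(values.values())) > 1:
            drift[key] = values
    return drift


# Hypothetical environment descriptions.
environments = {
    "local": {"viewport": "1920x1080", "headless": "false", "timezone": "Europe/London", "locale": "en-GB"},
    "ci": {"viewport": "1280x720", "headless": "true", "timezone": "UTC", "locale": "en-GB"},
    "cloud": {"viewport": "1280x720", "headless": "false", "timezone": "UTC", "locale": "en-GB"},
}

for setting, values in find_drift(environments).items():
    print(setting, values)
```

Settings such as viewport size, headless mode, and timezone are common sources of results that differ between a laptop and a pipeline, so they are reasonable candidates to pin explicitly.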

Some tools don’t play well with CI/CD.

Not every test tool is built for pipelines. Cross-browser testing that works fine locally can start breaking once you plug it into CI, especially if you’re running in parallel or dealing with login/auth flows.

6. Too Much to Test, Not Enough Time

No team has the time or budget to test everything. Between browser versions, operating systems, screen sizes, and devices, the number of possible combinations grows fast and not all of them matter equally.

Most teams either spread their resources too thinly across too many scenarios or focus too narrowly, missing critical test cases. Without a clear idea of what your users actually use, testing becomes guesswork.

Cross-browser compatibility issues often show up in places you didn’t think to test, like older Android browsers or a minor Safari version on iOS. You can’t test every setup, but you can test the ones that matter most.

That means using real user data, picking high-impact combinations, and being okay with saying no to the rest. Otherwise, your time gets wasted on coverage that doesn’t protect anything.
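As a rough illustration of picking high-impact combinations, the sketch below sorts browser and OS pairs by session count and keeps adding them until a coverage goal is reached. The session numbers are made up, and the 95% goal is an assumption you would replace with your own threshold and analytics export.

```python
def pick_targets(sessions_by_target, coverage_goal=0.95):
    """Pick the most-used targets until their combined share reaches the goal."""
    total = sum(sessions_by_target.values())
    if total == 0:
        return []
    ranked = sorted(sessions_by_target.items(), key=lambda item: item[1], reverse=True)
    chosen, covered = [], 0
    for target, sessions in ranked:
        if covered >= coverage_goal * total:
            break
        chosen.append(target)
        covered += sessions
    return chosen


# Hypothetical monthly sessions per (browser, OS) pair.
sessions_by_target = {
    ("Chrome", "Android"): 4200,
    ("Safari", "iOS"): 3100,
    ("Chrome", "Windows"): 1500,
    ("Samsung Internet", "Android"): 600,
    ("Edge", "Windows"): 350,
    ("Firefox", "Windows"): 200,
    ("UC Browser", "Android"): 50,
}

for browser, os_name in pick_targets(sessions_by_target):
    print(browser, "on", os_name)
```

With these numbers, five of the seven pairs cover 97.5% of sessions, and the remaining two become an explicit, documented decision rather than an accidental gap.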

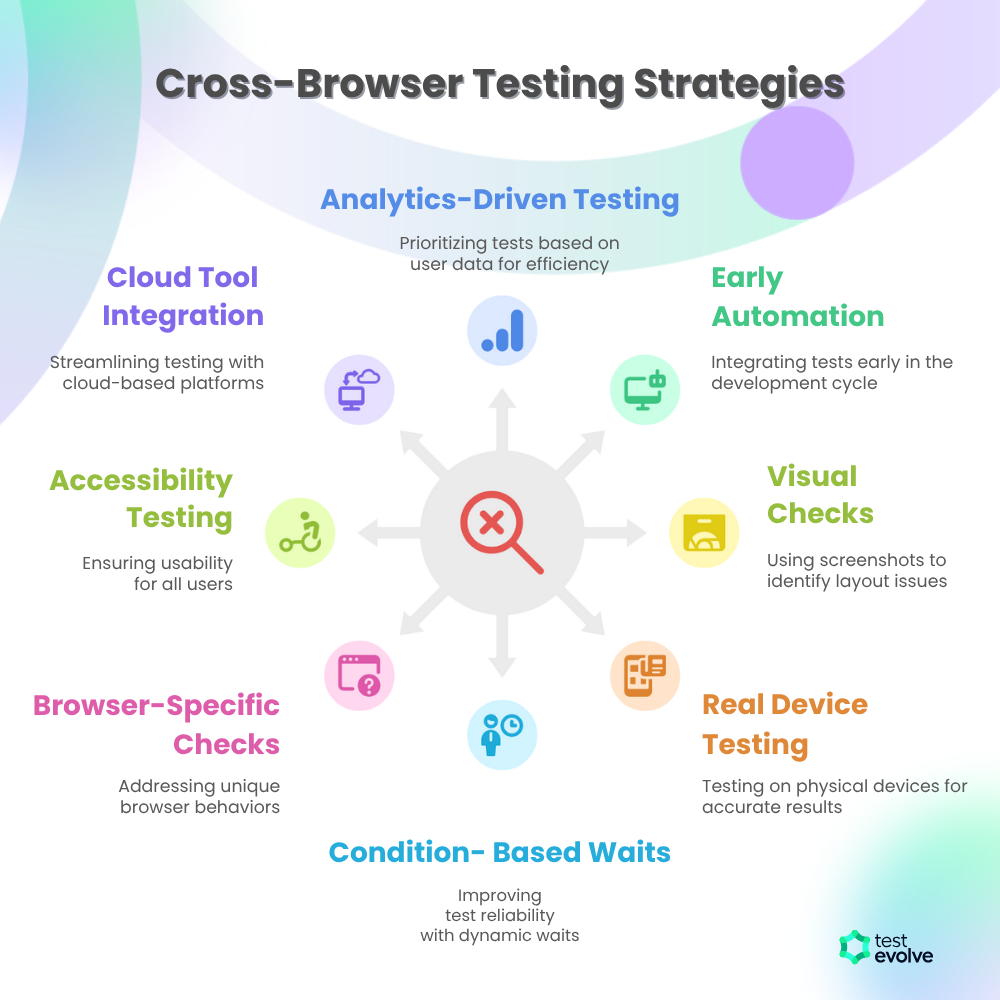

How to Fix Common Cross-Browser Testing Problems

Cross-browser testing problems don’t come from a lack of effort. They come from testing the wrong things, too late, or with the wrong tools. Here’s how top QA teams stay focused and catch what matters in cross-browser compatibility testing.

Use real analytics to define your test scope: Prioritise the browser–device–OS combos your users actually use. Skip the rest.

Automate early and run tests in parallel: Add critical flows to your test suite early in the sprint. Run them across browsers from day one.

Add visual checks to catch layout bugs: Screenshots and visual diffs catch issues like misaligned buttons, spacing shifts, and hidden elements, which are among the most common cross-browser compatibility issues.

Test on real devices during final checks: Physical devices expose bugs with rendering, gestures, and mobile responsiveness that emulators miss.

Use condition-based waits, not fixed delays: Wait for elements to be visible or stable instead of using static timeouts. It improves reliability and speed.

Watch for browser-specific quirks: Know how Safari handles layouts or how Firefox treats inputs. Spot-check known problem areas for your app and framework.

Check basic accessibility across browsers: Run tests for keyboard navigation, screen readers, and contrast handling to make sure no features break for users with assistive tech.

Use cloud tools that plug into CI/CD: Choose platforms that support browser versioning, logs, and live debugging without extra configuration.

Every cross-browser failure can be traced back to something predictable. The more proactive your setup, the less you fix under pressure.
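Running the same critical flow across browsers in parallel can be sketched with Python's standard thread pool. In the example below, `run_critical_flow` is a hypothetical stand-in that returns a simulated result so the code runs on its own. A real version would start a browser session through your automation tool or cloud provider.

```python
from concurrent.futures import ThreadPoolExecutor

BROWSERS = ["chrome", "firefox", "safari", "edge"]


def run_critical_flow(browser):
    """Hypothetical stand-in for one suite run; replace with a real runner call."""
    # Simulated outcome so the sketch is runnable: pretend Safari has a layout bug.
    passed = browser != "safari"
    return browser, passed


def run_across_browsers(browsers, runner, max_workers=4):
    """Run the same flow for every browser concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(runner, browsers))


results = run_across_browsers(BROWSERS, run_critical_flow)
failing = sorted(browser for browser, passed in results.items() if not passed)
print("failing browsers:", failing)
```

Because each browser run is independent, the total time approaches that of the slowest single run instead of the sum of all runs.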

Test Evolve addresses these infrastructure challenges with flexible pricing options, starting at $79 per month.

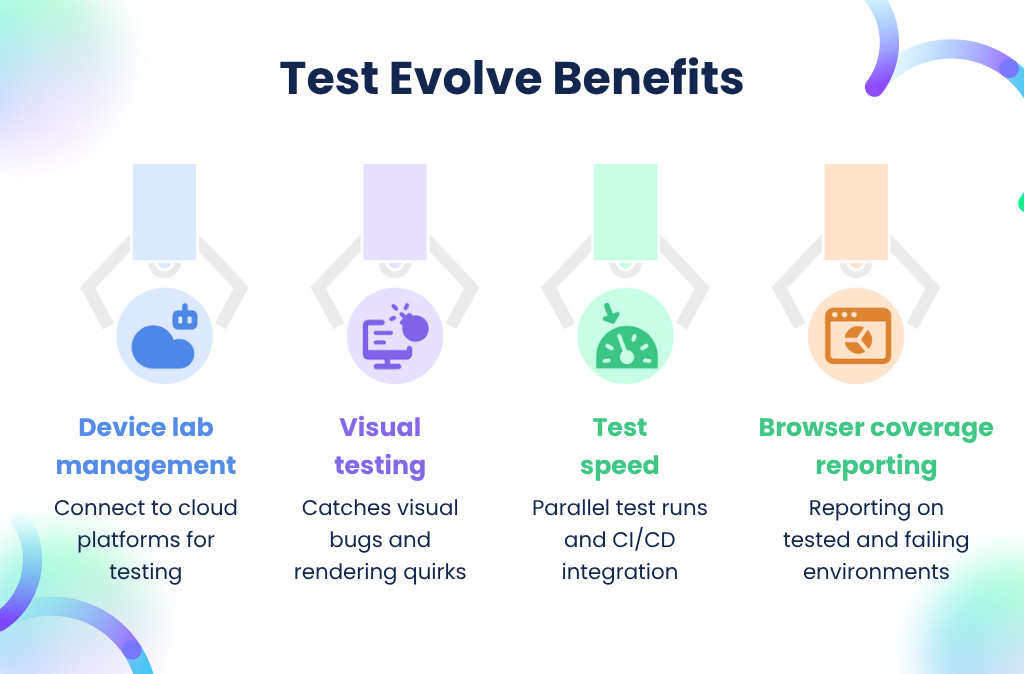

How Does Test Evolve Make Cross-Browser Testing and Debugging Easier?

Consider this scenario: You're halfway through a sprint, and a UI change breaks the layout again, but only on Safari. Your test suite runs fine in Chrome, but you’re not confident about Edge. You’re pushing code through staging, but debugging these inconsistencies takes longer than the actual fix.

This is where Test Evolve fits in: Not as another testing framework to learn but as a layer that helps modern QA teams run faster cross-browser testing without sacrificing accuracy.

Here’s how it solves the most common pain points:

No time to manage device labs?

Plug Test Evolve into your existing cloud platforms, such as BrowserStack, Sauce Labs, and LambdaTest, and run tests on real browsers without setting up anything.

Need to catch what functional tests miss?

Visual snapshots are baked into every test run. You’ll catch alignment shifts, layout bugs, or rendering quirks that often cause cross-browser compatibility testing failures.

Are slow tests blocking release?

Test Evolve runs your suites in parallel and integrates with your CI/CD. It doesn’t delay pipelines; it runs alongside them.

Have to explain browser coverage to stakeholders?

Get reporting that maps out what’s tested, what’s failing, and which environments still carry risk. No guesswork, only clarity!

See how Test Evolve supports faster, more reliable cross-browser debugging. With a 30-day free trial, explore our cross-browser testing capabilities today!

Making Cross-Browser Testing Work in 2025

Teams don’t need to chase perfection across every browser. What matters is that the core user journeys, be it forms, layouts or navigation, work where users expect them to.

The top six cross-browser testing challenges for 2025, such as flaky automation, layout bugs, and legacy browser quirks, are still a reality. But they’re manageable with the right tools, real usage data, and fast feedback loops.

This comprehensive overview of the top cross-browser testing challenges will help you spot these threats early, reduce rework, protect user trust, and keep your team shipping with confidence. When your testing aligns with how your product evolves, even browser-related issues become easier to catch and control.

Test Evolve supports this shift with clear reports, real device coverage, and tests that hold up across browsers. Try it for free!

Frequently Asked Questions

-

Web elements render differently across browsers due to variations in CSS handling, JavaScript engines, viewport behaviour, and unsupported features. Common issues include layout shifts, broken navigation, unresponsive buttons, and inconsistent font rendering. These bugs often appear in legacy browsers or when using experimental HTML, CSS, or third-party scripts.

-

Use a test automation framework like Selenium, Cypress, or Playwright integrated with a browser cloud platform. Run tests across real devices and browsers. Include visual regression checks, use analytics to prioritise browser coverage, and test critical user journeys. Integrate your cross-browser tests into your CI/CD pipeline for faster feedback.

-

Different browsers use different rendering engines and JavaScript interpreters. Without testing, you risk broken layouts, inaccessible features, or failed user actions. Cross-browser testing ensures consistent performance, protects conversions, and improves accessibility. It also helps teams identify browser-specific bugs before they reach production environments or real users.

-

Yes. Without cross-browser testing, your website may work in one browser but fail in others. It ensures UI consistency, functional accuracy, and accessibility across browser types, versions, and operating systems. It helps QA teams catch rendering issues, script failures, and compatibility bugs before users experience them.

-

Some cross-browser testing tools also support mobile testing by offering emulators, simulators, or real device clouds. They help validate how web apps render on mobile browsers across Android and iOS. For native mobile app testing, tools like Appium or platform-specific mobile automation solutions are more appropriate.

-

Test Evolve Spark enables automated cross-browser testing across Chrome, Firefox, Safari, and Edge. It supports JavaScript, TypeScript, and Ruby, integrating with Selenium WebDriver and Cucumber. Features include visual regression testing, accessibility audits, and real-time reporting via the Halo dashboard, facilitating efficient and comprehensive test coverage.

-

Test Evolve Spark offers a unified platform combining functional, visual, and accessibility testing. Its integration with tools like Applitools, Percy, and BrowserStack, along with support for multiple programming languages, provides flexibility. The Flare Studio and Halo dashboard enhance test management and reporting capabilities.

-

Yes, Test Evolve Spark integrates seamlessly with Selenium WebDriver for web testing and Appium for mobile testing. It supports behaviour-driven development using Cucumber and accommodates JavaScript, TypeScript, and Ruby, enabling comprehensive end-to-end testing across various platforms and devices.

-

Test Evolve Spark automates functional, visual regression, accessibility, API, and mobile tests. It supports testing for web applications, mobile apps, APIs, and desktop applications, providing a versatile solution for comprehensive test automation needs.

-

Test Evolve Spark incorporates visual regression testing by capturing and comparing screenshots to detect UI anomalies. It integrates with tools like Applitools and Percy for advanced visual checks and provides detailed reports through the Halo dashboard, aiding in identifying and addressing visual discrepancies effectively.