Performance Testing: Importance, Approaches, and Best Practices

What is Performance Testing?

Performance Testing is an integral part of software testing that assesses the system's performance under varied scenarios. Factors such as stability, scalability, speed and responsiveness are evaluated while considering different levels of traffic and load.

Whatever type of application you are developing, put it through performance tests to confirm that it behaves as expected across different hardware and software environments. When assessing an application's performance, check that it responds quickly, that visuals, text boxes, and other components render as expected, that it can accommodate multiple users when required, and that it keeps memory usage to a minimum.

Let us delve further into how to execute such tests, their importance, the various tests available in this domain, and some useful practices to simplify the process.

Why is Performance Testing Necessary?

Application Performance Testing, or Performance Testing for short, is vital in guaranteeing that your software meets performance requirements. For instance, you can use performance testing to verify whether an application can handle thousands of users logging in simultaneously or executing different actions concurrently. Through such tests, you can pinpoint and eliminate any performance bottlenecks within the application.

Different Approaches to Performance Testing

There are numerous types of software performance testing used to assess a system's readiness:

Load Testing: Measures how a system behaves under different load levels. It evaluates the system's response time and stability as the number of user operations, or of simultaneous users running transactions, increases.

Stress Testing: Also known as fatigue testing, this assesses a system's capacity to perform under unlikely circumstances (200% of expected capacity, for example). It shows when the software fails and starts breaking down, which helps prevent malfunctions in extreme situations.

Spike Testing: Evaluates how the software performs when it is exposed to high traffic and usage levels over brief periods of time.

Endurance Testing: Also called soak testing, this evaluates software performance under typical load conditions over long durations (24 to 76 hours, for example). Its primary focus is identifying common problems, such as memory leaks, that can cause malfunctions.

Scalability Testing: Evaluates how well a software application can handle growing workloads and maintain stability. This is done by gradually increasing the load to observe system responses, or by keeping the load stable and varying other factors such as memory and bandwidth.
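As a rough illustration of how these test types differ, the sketch below expresses four of them as load profiles: the number of simulated users at a given minute of the test. The function name `target_users`, the ramp length, and every number are assumptions chosen for the example, not values from any standard or tool.

```python
def target_users(test_type: str, minute: int, expected_peak: int = 100) -> int:
    """Return the number of simulated users at a given minute.

    The shapes and numbers are illustrative assumptions only.
    """
    if test_type == "load":
        # Ramp linearly to the expected peak over 10 minutes, then hold.
        return min(expected_peak, expected_peak * minute // 10)
    if test_type == "stress":
        # Keep ramping past the expected peak, up to 200% of it.
        return min(2 * expected_peak, expected_peak * minute // 10)
    if test_type == "spike":
        # Low baseline with a short burst during minutes 5 and 6.
        return 3 * expected_peak if 5 <= minute < 7 else expected_peak // 10
    if test_type == "soak":
        # Typical (here: half of peak) load held constant for the whole run.
        return expected_peak // 2
    raise ValueError(f"unknown test type: {test_type}")
```

A load generator could call `target_users("stress", minute)` once per minute to decide how many simulated users to keep active. Scalability testing is left out because it varies the system's resources as well as the load.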

KPIs for Performance Testing

Results from system performance testing can be assessed using metrics, or Key Performance Indicators (KPIs), such as:

Memory: The amount of RAM a system requires or utilises when it is processing data and executing an action.

Latency/Response Time: The delay between an incoming user request and the start of the system's response.

Throughput: The amount of data processed by the system over a given period of time.

Bandwidth: The amount of data that one or more networks can transmit per second.

CPU interrupts per second: The number of hardware interrupts a system receives per second while processing data.

Speed: How quickly a webpage containing multiple elements, such as text, video, and images, loads.

| Performance Testing KPI | Definition |
|---|---|
| Response Time | The time it takes for the system to respond to a user request. |
| Throughput | The number of requests that the system can handle per unit of time. |
| Concurrent Users | The number of users that can use the system simultaneously without significantly impacting its performance. |
| Error Rate | The percentage of requests that fail or generate errors. |
| CPU Usage | The percentage of CPU resources used by the system during the test. |
| Memory Usage | The amount of memory used by the system during the test. |
| Network Usage | The amount of network bandwidth used by the system during the test. |
| Scalability | The ability of the system to handle increasing levels of traffic without degrading performance. |
| Availability | The percentage of time that the system is available and responsive to user requests. |
| Peak Response Time | The maximum time it takes for the system to respond to a user request during peak usage. |
| Transaction Throughput | The number of successful transactions per unit of time. |
| Error Rate by Type | The percentage of requests that fail or generate errors, broken down by error type. |
| Resource Utilization | The percentage of resources used by the system during the test, such as disk I/O, database connections, or external services. |
| User Satisfaction | A subjective measure of how satisfied users are with the system's performance, typically gathered through surveys or feedback forms. |
| Time to First Byte (TTFB) | The amount of time it takes for the server to send the first byte of data in response to a user request. |
| Page Load Time | The time it takes for a web page to fully load and become interactive. |
| Latency | The time it takes for a packet of data to travel from the user's device to the server and back. |
| Peak User Load | The maximum number of concurrent users the system can handle before performance degrades. |

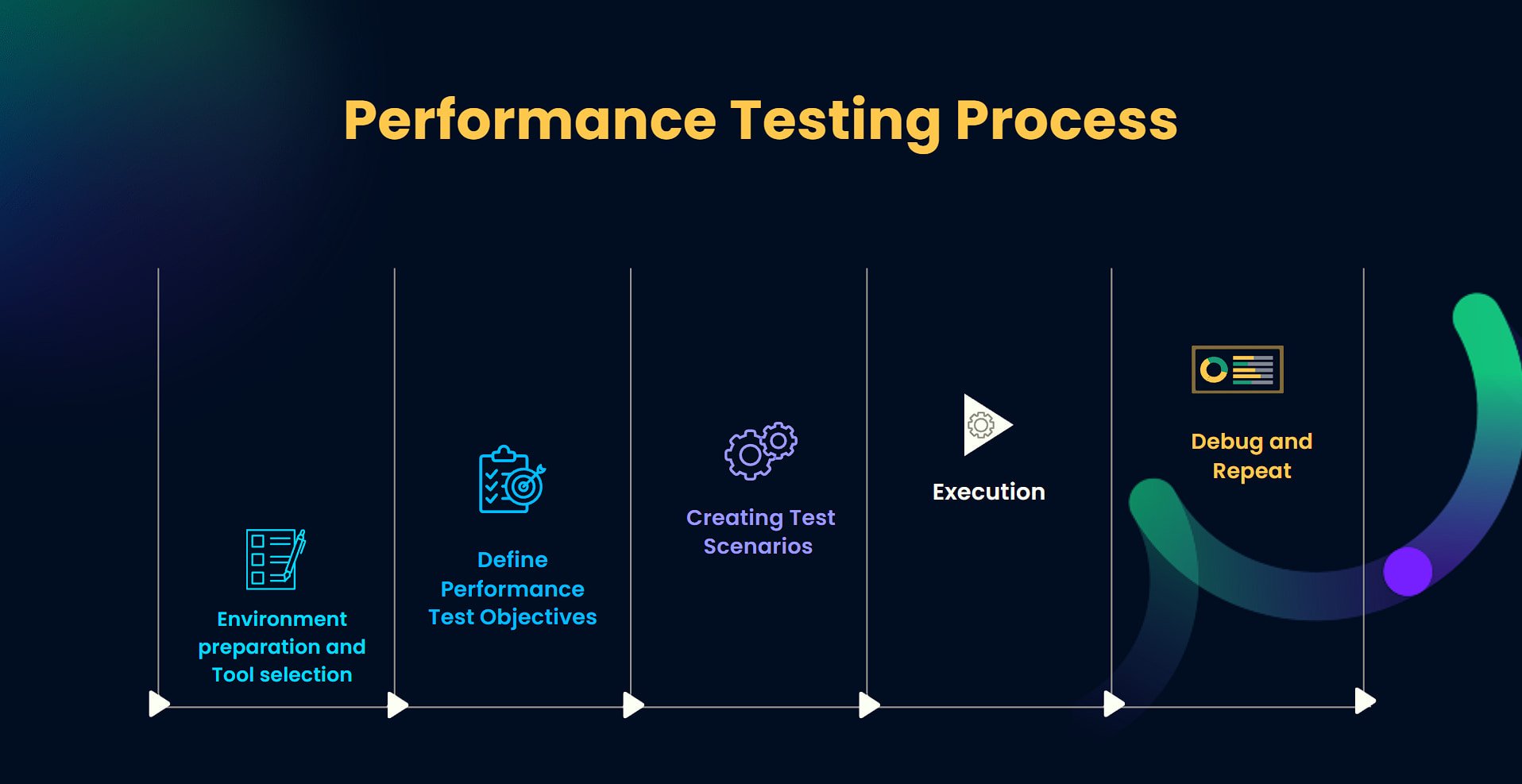
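Several of the KPIs above can be derived from the raw request records a test run produces. The following is a minimal sketch, assuming each record holds a response time in seconds and a success flag. The `Sample` class, the `summarize` function, and the result key names are hypothetical, and the 95th percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    """One recorded request: how long it took and whether it succeeded."""
    seconds: float
    ok: bool


def summarize(samples: list[Sample], test_duration_s: float) -> dict[str, float]:
    """Compute a few KPIs from raw request samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    times = sorted(s.seconds for s in samples)
    failures = sum(1 for s in samples if not s.ok)
    # Nearest-rank 95th percentile: the smallest value that is greater than
    # or equal to at least 95% of the samples.
    p95_index = math.ceil(95 * len(times) / 100) - 1
    return {
        "avg_response_s": mean(times),
        "p95_response_s": times[p95_index],
        "peak_response_s": times[-1],
        "throughput_rps": len(samples) / test_duration_s,
        "error_rate_pct": 100 * failures / len(samples),
    }
```

Reporting a percentile alongside the average is a common choice because a handful of very slow requests can hide behind a healthy-looking mean.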

Performance Testing: A Step-by-Step Guide

Establishing the Right Test Environment and Tools

It is crucial to identify a test environment that accurately represents the intended production environment. Pertinent specifications and configurations – such as hardware and software – must be documented to ensure close replication. It is vital to avoid running tests in production environments without appropriate safeguards to prevent disruptions to the user experience.

One way to test with realistic user conditions is by conducting performance tests on real browsers and devices. Rather than grappling with the many deficiencies of emulators and simulators, testers can use a real device cloud that provides real devices, browsers, and operating systems on demand for rapid testing.

By executing tests on a real device cloud, Quality Assurance (QA) professionals can obtain results that closely reflect real user conditions.

Comprehensive testing helps ensure that no significant bugs go unnoticed and that the software offers the best possible user experience.
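One lightweight way to document the environment's specifications is to capture them automatically with each test run. The sketch below uses only the Python standard library. The function name `describe_environment` and the choice of fields are assumptions, and a real project would likely add application-specific details such as database or server versions.

```python
import os
import platform


def describe_environment() -> dict[str, object]:
    """Collect basic facts about the machine the tests run on."""
    return {
        "os": platform.system(),           # e.g. "Linux" or "Windows"
        "os_release": platform.release(),
        "machine": platform.machine(),     # CPU architecture, e.g. "x86_64"
        "cpu_count": os.cpu_count(),       # logical CPUs; may be None if unknown
        "python_version": platform.python_version(),
    }
```

Storing this record next to the test results makes it easier to tell whether two runs were made under comparable conditions.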

Define Performance Test Objectives

Having well-defined goals and standards for assessing the performance of an application is essential. Consider what your app/product requires and establish relevant objectives. Your QA team can help you develop suitable metrics, thresholds, and constraints regarding the system's effectiveness.
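Objectives are easiest to verify when they are written down as concrete limits. As a minimal sketch, the example below stores each objective as the maximum acceptable value for a KPI and reports which ones a test run missed. The KPI names, the limits, and the `violations` function are hypothetical placeholders for whatever your team agrees on.

```python
# Hypothetical objectives: each KPI name maps to its maximum acceptable value.
OBJECTIVES = {
    "p95_response_s": 0.8,
    "error_rate_pct": 1.0,
    "cpu_usage_pct": 75.0,
}


def violations(measured: dict[str, float], objectives: dict[str, float]) -> list[str]:
    """Return one message per objective that was missed or not measured."""
    problems = []
    for name, limit in objectives.items():
        value = measured.get(name)
        if value is None:
            problems.append(f"{name}: not measured")
        elif value > limit:
            problems.append(f"{name}: {value} exceeds limit {limit}")
    return problems
```

An empty list means every objective was met, so the result can serve as a simple pass/fail gate for a test run.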

Creating Test Scenarios

Construct multiple tests that examine the software's performance in different scenarios, with the aim of covering the different use cases. Automating the tests is advantageous because it saves time and makes every run consistently repeatable.
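Scenarios can be described as plain data so that the same definitions drive every automated run. The sketch below assumes a hypothetical online shop: the scenario names, steps, and weights are invented for illustration, and `assign_scenarios` simply picks one scenario per simulated user in proportion to its weight.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    steps: tuple[str, ...]  # ordered user actions, e.g. page names
    weight: int             # relative share of simulated users


# Hypothetical scenarios for an online shop.
SCENARIOS = [
    Scenario("browse", ("home", "search", "product"), weight=6),
    Scenario("login", ("home", "login", "account"), weight=3),
    Scenario("checkout", ("login", "cart", "payment"), weight=1),
]


def assign_scenarios(user_count: int, seed: int = 0) -> list[Scenario]:
    """Pick one scenario per simulated user, in proportion to the weights."""
    rng = random.Random(seed)  # a fixed seed keeps runs repeatable
    weights = [s.weight for s in SCENARIOS]
    return rng.choices(SCENARIOS, weights=weights, k=user_count)
```

Seeding the random generator is a deliberate choice here: two runs with the same seed give the same mix of users, which makes results easier to compare.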

Execution

Execute your tests across various phases (Load, Soak, Stress) and ensure you have the necessary monitoring in place to pinpoint any failures or performance bottlenecks in line with known load conditions.
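To make the execution step concrete, here is a minimal sketch of a test phase that issues requests from several workers at once and records how long each one takes. The `fake_request` function is a stand-in for a real call to the system under test, and `timed_call` and `run_phase` are hypothetical helper names. Dedicated load-testing tools do far more, so treat this only as an outline of the idea.

```python
import time
from collections.abc import Callable
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> bool:
    """Stand-in for a real call to the system under test (hypothetical)."""
    time.sleep(0.01)  # pretend the system takes about 10 ms to respond
    return True


def timed_call(action: Callable[[], bool]) -> tuple[float, bool]:
    """Run one action and return (elapsed seconds, success flag)."""
    start = time.perf_counter()
    try:
        ok = bool(action())
    except Exception:
        ok = False  # count any exception as a failed request
    return time.perf_counter() - start, ok


def run_phase(action: Callable[[], bool], concurrent_users: int,
              total_requests: int) -> list[tuple[float, bool]]:
    """Issue requests from several workers at once and collect the timings."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(lambda _: timed_call(action), range(total_requests)))
```

For example, `run_phase(fake_request, concurrent_users=5, total_requests=20)` returns 20 timing records that monitoring or reporting code can then analyse.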

Debug and Repeat

After evaluating the tests and logging any bugs, share the results with all team members. Once the performance issues have been resolved, run your performance tests again to confirm that the bottlenecks are gone and that application performance has improved.
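Re-running the tests is most useful when the new results are compared with the earlier ones in a consistent way. The sketch below assumes every KPI is "lower is better" (response times, error rates) and labels each one by its relative change. The `compare_runs` name and the 5% tolerance are arbitrary assumptions for illustration.

```python
def compare_runs(before: dict[str, float], after: dict[str, float],
                 tolerance_pct: float = 5.0) -> dict[str, str]:
    """Label each lower-is-better KPI as improved, regressed, or unchanged."""
    verdicts = {}
    # Only KPIs present in both runs can be compared.
    for name in sorted(before.keys() & after.keys()):
        old, new = before[name], after[name]
        if old == 0:
            # No relative change exists for a zero baseline.
            change_pct = 0.0 if new == 0 else float("inf")
        else:
            change_pct = 100 * (new - old) / old
        if change_pct > tolerance_pct:
            verdicts[name] = "regressed"
        elif change_pct < -tolerance_pct:
            verdicts[name] = "improved"
        else:
            verdicts[name] = "unchanged"
    return verdicts
```

The tolerance keeps small run-to-run fluctuations from being reported as regressions or improvements.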

Best Practices when Undertaking Performance Testing

Define clear performance goals: Before starting performance testing, it is important to have a clear idea of your desired outcome. Define achievable goals and objectives, establish relevant Key Performance Indicators (KPIs) and set realistic targets for them. Response time, throughput, resource utilisation and error rate are the KPIs most worth monitoring.

Test early and often: It is good practice to test as much and as often as possible throughout the development lifecycle to detect as many performance issues and bugs as possible. Removing bottlenecks early on will improve your product’s performance, cut costs for fixing bugs later on and facilitate better scalability in the long run.

Simulate realistic user behaviour: User scenarios should be as realistic as possible so that the simulations closely match real-life situations. Performance tests should include scenarios such as simultaneous logins, peak traffic, and heavy traffic across multiple browsers and devices.

Use appropriate testing tools: After choosing your KPIs, assess the market for a suitable testing tool based on your product's requirements, your budget and your team's technical expertise. This can make a huge difference, as no one-size-fits-all tool suits every project. Choose yours wisely!

Monitor and analyse results: Last but not least, monitoring your performance and learning from your test results is crucial. Compare your metrics before and after every test to see how your product performs and improves after debugging and re-testing. Once the critical issues that prevent the app from functioning properly are resolved, you can continue to fine-tune it and give your users a good experience.

Conclusion

In conclusion, performance testing is crucial for any software system to ensure it performs optimally for the end user and support teams. It helps to identify and fix performance issues before they become major problems and ultimately impact users’ experience.

Testing and Development teams can proactively maintain and enhance the system's performance and avoid potential downtime or service disruptions by monitoring and optimising the system.

Investing in performance testing helps to ensure improved user satisfaction, a reduction in support costs, and an increase in revenue.

In today's competitive market, user experience is paramount, and real-time performance testing is necessary to deliver exceptional software that meets users' needs.